EARTOUCH

Facilitating Smartphone Use for Visually Impaired People in Mobile and Public Scenarios

ABSTRACT

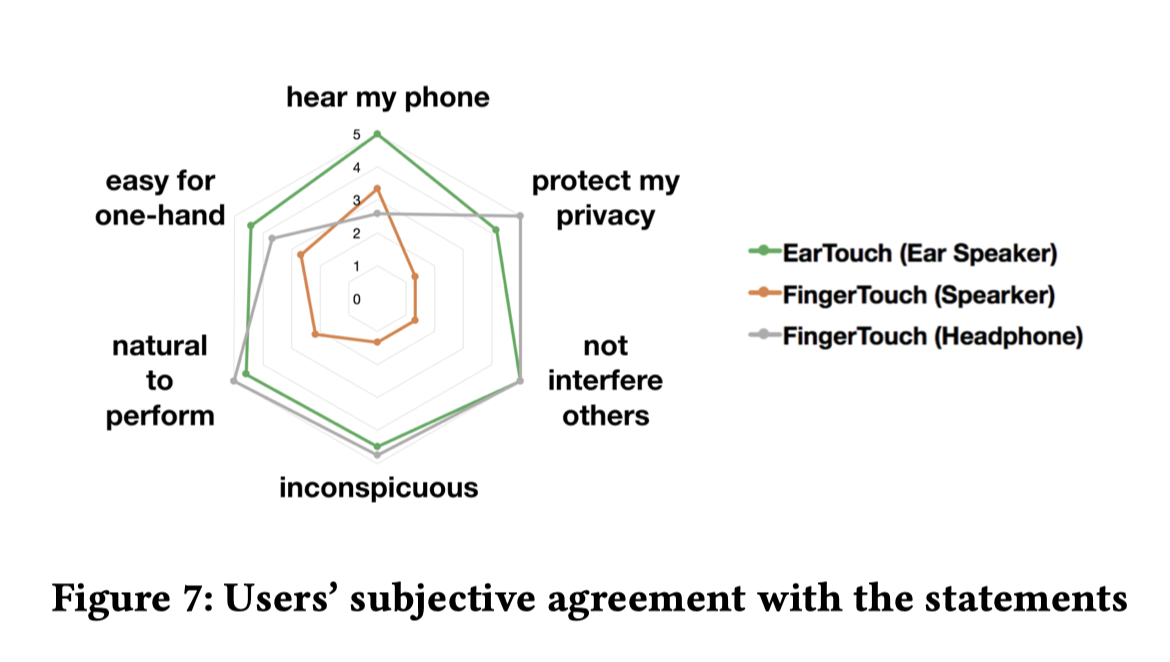

Interacting with a smartphone using touch input and speech output is challenging for visually impaired people in mobile and public scenarios, where only one hand may be available for input (e.g., while holding a cane) and using the loudspeaker for speech output is constrained by environmental noise, privacy, and social concerns. To address these issues, we propose EarTouch, a one-handed interaction technique that allows the users to interact with a smartphone using the ear to perform gestures on the touchscreen. Users hold the phone to their ears and listen to speech output from the ear speaker privately. We report how the technique was designed, implemented, and evaluated through a series of studies. Results show that EarTouch is easy, efficient, fun and socially acceptable to use.

FULL CITATION

Ruolin Wang, Chun Yu, Xing-Dong Yang, Weijie He, and Yuanchun Shi. 2019. EarTouch: Facilitating Smartphone Use for Visually Impaired People in Mobile and Public Scenarios. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19). Association for Computing Machinery, New York, NY, USA, Paper 24, 1–13. [DOI]: https://doi.org/10.1145/3290605.3300254

EARTOUCH: FREQUENTLY ASKED QUESTIONS

- What are the challenges in detecting ear gestures?

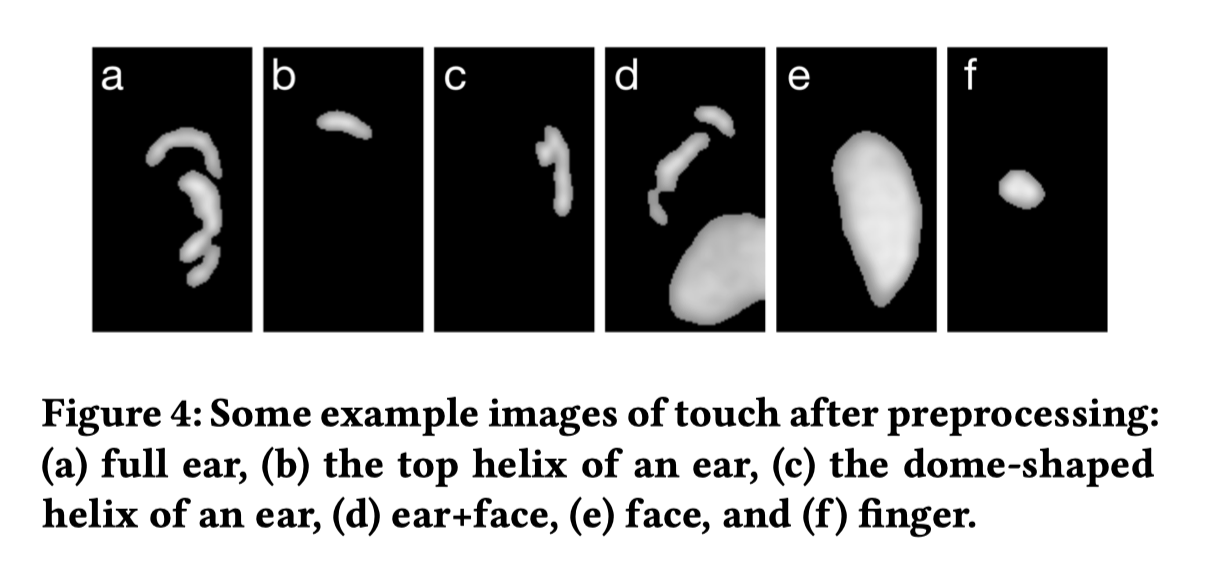

The ear is soft and has a complex shape, so its contact pattern with the touchscreen is complicated and easily deformed, unlike that of a finger touch. This makes it hard for the phone to track the ear’s movement and gestural input. Additionally, the ergonomics of interaction are reversed: EarTouch gestures have to be performed by moving the device rather than the input apparatus (the ear). As a result, the interaction paradigm cannot be designed by directly applying what we know about finger-based touch.

- Is there anything special about the ear gestures?

In our participatory design workshop, 23 visually impaired smartphone users contributed many creative and interesting gesture designs, e.g., simulating pumping-out and pushing-back operations with the ear on the screen to support copy and paste commands, or folding the ear on the screen to trigger page turning. However, our goal is to provide an effective supplement that improves one-handed input in public and mobile scenarios. Hence, we needed to select gestures that are acceptable to, and easy to perform for, general users. The final gesture set includes eight gestures: Tap, Double Tap, Press, Long Touch, Swipe, Free-Form, Rotational and Continuous Rotation.

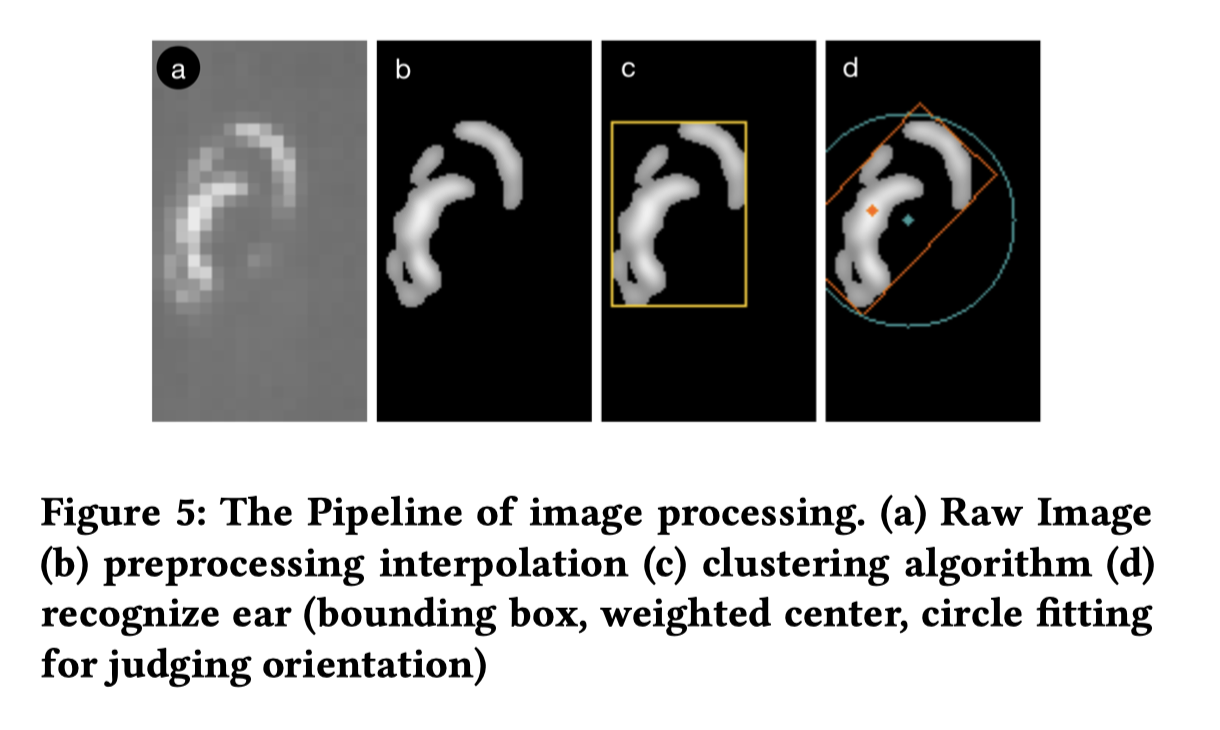

- What is the pipeline of processing capacitive data and classifying the ear gestures?

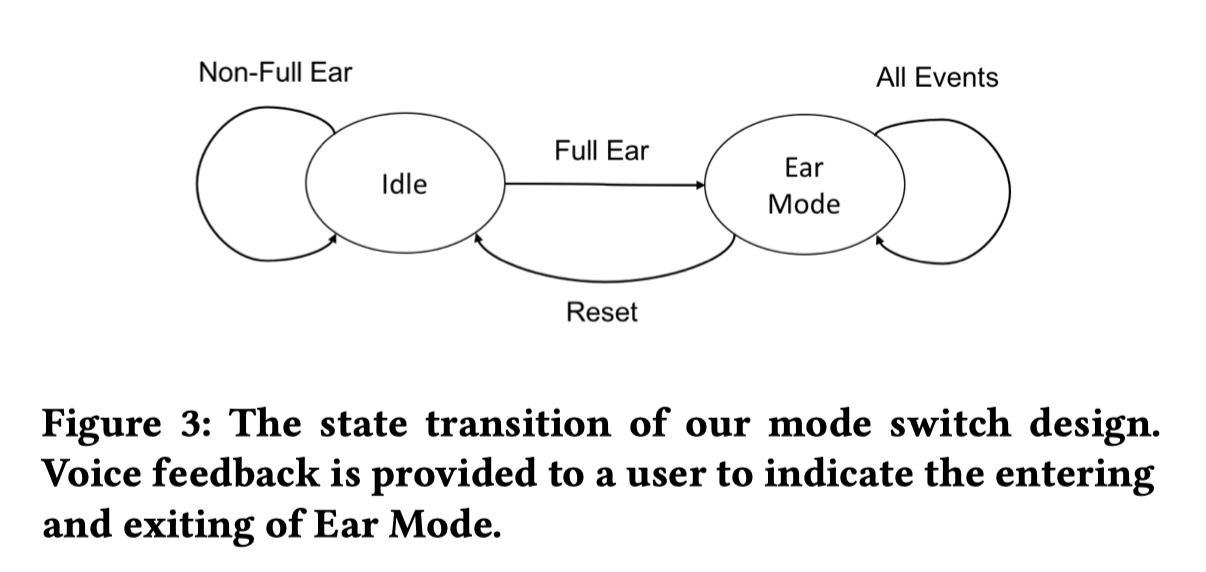

We obtained the capacitive images of the touchscreen from the hardware abstraction layer and transferred the data to the application layer at 45 Hz using the JNI mechanism. Similar to the Android touch event lifecycle, we built an ear touch event lifecycle (EarOn, EarMove, EarOff) based on the capacitive data. To deal with the deformation of the ear, we track the movement between two EarMove events using an improved KCF (Kernelized Correlation Filter) algorithm. We designed a mode switch technique that lets users enter Ear Mode by pressing the full ear on the screen and return to Finger Mode by bringing the smartphone back in front of them. In Ear Mode, all inputs are treated as ear gestures and classified by a C4.5 decision tree model, which takes into account time (from EarOn to the present), moving distance, and rotation degree. More details are in Sections 5 and 6 of our paper.
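The event lifecycle described above can be illustrated with a minimal Python sketch. It is not the implementation from the paper. The threshold values, the `contact_centroid` helper, and the `EarLifecycle` class are hypothetical names chosen for illustration. A simple weighted centroid stands in for the improved KCF tracker, and rotation is left out. The sketch only shows how a stream of capacitive frames could be turned into EarOn, EarMove, and EarOff events while accumulating two of the features (time and moving distance) that a classifier would consume.

```python
import math
from enum import Enum


class EarEvent(Enum):
    EAR_ON = "EarOn"
    EAR_MOVE = "EarMove"
    EAR_OFF = "EarOff"


# Hypothetical values; real ones depend on the touchscreen hardware.
CONTACT_THRESHOLD = 50   # minimum capacitance for a cell to count as touched
MIN_CONTACT_CELLS = 4    # minimum number of touched cells for a valid contact


def contact_centroid(frame):
    """Return the capacitance-weighted (x, y) centroid of touched cells,
    or None if too few cells exceed the threshold."""
    total = sum_x = sum_y = 0.0
    count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= CONTACT_THRESHOLD:
                total += value
                sum_x += x * value
                sum_y += y * value
                count += 1
    if count < MIN_CONTACT_CELLS:
        return None
    return (sum_x / total, sum_y / total)


class EarLifecycle:
    """Turns capacitive frames into EarOn / EarMove / EarOff events."""

    def __init__(self):
        self.touching = False
        self.start_time = None
        self.last_pos = None
        self.distance = 0.0

    def feed(self, frame, timestamp):
        """Process one frame; return an EarEvent, or None if nothing happened."""
        pos = contact_centroid(frame)
        if pos is None:
            if self.touching:
                self.touching = False
                return EarEvent.EAR_OFF
            return None
        if not self.touching:
            # First frame with contact: start a new gesture.
            self.touching = True
            self.start_time = timestamp
            self.last_pos = pos
            self.distance = 0.0
            return EarEvent.EAR_ON
        # Contact continues: accumulate the path length (centroid stand-in for KCF).
        self.distance += math.dist(self.last_pos, pos)
        self.last_pos = pos
        return EarEvent.EAR_MOVE

    def features(self, timestamp):
        """Time since EarOn and moving distance for the current gesture."""
        return {"duration": timestamp - self.start_time, "distance": self.distance}
```

In this sketch, `feed` would be called once per incoming frame, and `features` would be read on each EarMove event to supply inputs to a gesture classifier.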

EARTOUCH: STORIES BEHIND RESEARCH

- This is my first accessibility project for blind and visually impaired people. I made friends with so many talented, intelligent and admirable people. They not only discussed research questions with me, but also inspired me with their tenacity and courage. Among them, Prof. Jia Yang, who graduated from Harvard Kennedy School and won an Alumni Public Service Award, encouraged me to further my studies abroad.

- During 2017-2018, I visited six blind massage shops, two local community organizations, one special education college and the disabled people's performing art troupe to better understand how our target users live, work, study and relax. I also volunteered at the Beijing Volunteer Service Foundation and the China Braille Library, and held the first volunteering activity to invite visually impaired people onto the Tsinghua University campus.

- I am glad to see that we are gradually building the Accessibility Research Team at Tsinghua and launching more and more research projects in this field. In December 2018, I shared our stories at the annual TEDxTHU event.

EARTOUCH: RELATED PROJECTS

- Prevent Unintentional Touches on Smartphone. (2016-2017, contributed to a smarter touch recognition algorithm based on capacitive data)

- SmartTouch: Intelligent Multi-modal Proxy for Visually Impaired Smartphone Users. (2017-2018, contributed to system design and fabrication)