Theme | Assistive Technology

CHI 2021 [DOI]

So much of the language we use to describe a product is centered around vision, which poses a barrier to people who don’t experience the world visually. Inspired by observing how sighted friends help blind people with online shopping, we propose Revamp, a system that leverages customer reviews for interactive information retrieval. We identified four main aspects (color, logo, shape, and size) that are vital for blind and low vision users to understand the visual appearance of a product, and formulated syntactic rules to extract review snippets, which were used to generate image descriptions and responses to users’ queries. Revamp also inspired several exciting future directions in accessible information seeking: (i) simplifying and reconstructing web pages according to the user’s current task; (ii) providing a coordinated experience of active querying and passive reading to support flexible information seeking; (iii) leveraging related text resources on the web page, such as reviews, to fill in the information gap.
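As a rough illustration of the snippet extraction idea, the sketch below groups review sentences under the four aspects using plain keyword matching. The keyword lists and the `extract_snippets` function are assumptions made for this example; they stand in for, and are much simpler than, the syntactic rules used in Revamp.

```python
import re

# Hypothetical keyword lists per aspect; not taken from the Revamp paper.
ASPECT_KEYWORDS = {
    "color": {"color", "colour", "red", "blue", "black", "white", "green"},
    "logo": {"logo", "brand", "emblem"},
    "shape": {"shape", "round", "square", "rectangular", "curved"},
    "size": {"size", "small", "large", "tall", "wide", "inches"},
}


def extract_snippets(reviews):
    """Group review sentences by the aspect keywords they mention."""
    snippets = {aspect: [] for aspect in ASPECT_KEYWORDS}
    for review in reviews:
        # Split on sentence-ending punctuation followed by whitespace.
        for sentence in re.split(r"(?<=[.!?])\s+", review.strip()):
            words = set(re.findall(r"[a-z]+", sentence.lower()))
            for aspect, keywords in ASPECT_KEYWORDS.items():
                if words & keywords:
                    snippets[aspect].append(sentence)
    return snippets


reviews = [
    "The mug is bright red. It is quite small!",
    "The logo is printed on the front.",
]
snippets = extract_snippets(reviews)
```

A query such as "what color is it?" could then be answered by reading back `snippets["color"]`, which is one simple way to connect extracted snippets to user questions.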

Voicemoji: Emoji Entry Using Voice for Visually Impaired People

CHI 2021 [DOI]

Keyboard-based emoji entry can be challenging for people with visual impairments: users have to sequentially navigate emoji lists using screen readers to find their desired emojis, which is a slow and tedious process. In this work, we explore the design and benefits of emoji entry with speech input, a popular text entry method among people with visual impairments. After conducting interviews to understand blind or low vision (BLV) users’ current emoji input experiences, we developed Voicemoji, which (1) outputs relevant emojis in response to voice commands, and (2) provides context-sensitive emoji suggestions through speech output. We also conducted a multi-stage evaluation study with six BLV participants from the United States and six BLV participants from China, finding that Voicemoji significantly reduced entry time by 91.2% and was preferred by all participants over the Apple iOS keyboard. Based on our findings, we present Voicemoji as a feasible solution for voice-based emoji entry.

LightWrite: Teach Handwriting to The Visually Impaired with A Smartphone

CHI 2021 [DOI]

Learning to write is challenging for blind and low vision (BLV) people because of the lack of visual feedback. Despite the rapid advancement of digital technology, handwriting is still an essential part of daily life. Although tools designed for teaching BLV people to write exist, many are expensive and require the help of sighted teachers. We propose LightWrite, a low-cost, easy-to-access smartphone application that uses voice-based descriptive instruction and feedback to teach BLV users to write English lowercase letters and Arabic numerals in a specifically designed font. A two-stage study with 15 BLV users with little prior writing knowledge shows that LightWrite can successfully teach users to handwrite characters in an average of 1.09 minutes per letter. After initial training and 20 minutes of daily practice for 5 days, participants were able to write an average of 19.9 out of 26 letters in a form recognizable by sighted raters.

PneuFetch: Supporting Blind and Visually Impaired People to Fetch Nearby Object via Light Haptic Cues

CHI 2020 Late Breaking Works [DOI]

We present PneuFetch, a wearable device based on light haptic cues that helps blind and visually impaired (BVI) people fetch nearby objects in an unfamiliar environment. In our design, we generate friendly, non-intrusive, and gentle presses and drags to deliver direction and distance cues on the BVI user’s wrist and forearm. As a proof of concept, we discuss our PneuFetch wearable prototype, contrast it with past work, and describe a preliminary user study.

AuxiScope: Improving Awareness of Surroundings for People with Tunnel Vision

UIST 2019 Student Innovation Competition

Tunnel vision is the loss of peripheral vision with retention of central vision, resulting in a constricted, circular, tunnel-like field of vision. By age 65, one in three Americans has some form of vision-impairing eye condition and may notice their side or peripheral vision gradually failing. Unfortunately, this loss of peripheral vision can greatly affect a person’s ability to live independently. We propose a robotic assistant that helps people with tunnel vision locate and navigate to objects in their environment, based on object detection techniques and multimodal feedback.

EarTouch: Facilitating Smartphone Use for Visually Impaired People in Public and Mobile Scenarios

CHI 2019 [DOI]

Interacting with a smartphone using touch and speech output is challenging for visually impaired people in public and mobile scenarios, where only one hand may be available for input (e.g., with the other one holding a cane) and privacy may not be guaranteed when playing speech output through the speakers. We propose EarTouch, a one-handed interaction technique that allows users to interact with a smartphone by touching the ear to the screen. Users hold the smartphone in a talking position and listen to speech output from the ear speaker privately. EarTouch also brings us a step closer to inclusive design for all users who may experience situational disabilities.

SENTHESIA: Adding Synesthesia Experience to Photos

2018 GIX Innovation Competition Semi-Finalist

An integrated multi-modal solution for adding a synesthesia experience to ordinary food photos. Using natural language processing and visual recognition, SENTHESIA learns from the comments and photos on websites and from other online material to extract apt, nuanced descriptions from the semantic space and to analyze the key elements of the taste and sensory experience. Visually impaired users can also benefit from vivid descriptions that go beyond the photos. Proposed and designed the concept; now cooperating with an interdisciplinary team to implement a prototype.

SmartTouch: Intelligent Multi-modal Proxy for Visually Impaired Smartphone Users

Tsinghua University 35th, 36th Challenge Cup, Second Prize, 2017-2018

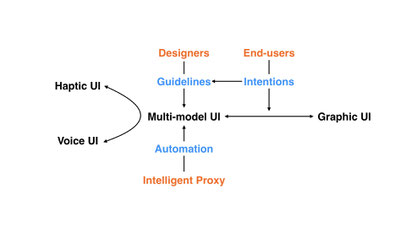

The closest approach to a natural user interface may be to build an intelligent proxy that supports multi-modal interactions according to end users' intentions. We take our first step towards improving the experience of visually impaired smartphone users. Based on interviews and participatory design activities, we explore the proper roles of graphic, haptic, and voice UI in this setting and establish guidelines for designing multi-modal user interfaces. The intelligent proxy serves as the control center that bridges "The Gulf of Execution and Evaluation", and it automatically performs tasks once it understands the user's intentions.

Theme | Sensing

Facilitating Temporal Synchronous Target Selection through User Behavior Modeling

IMWUT 2019 [DOI]

Temporal synchronous target selection is an association-free selection technique: users select a target by generating signals (e.g., finger taps and hand claps) in sync with its unique temporal pattern. However, the classical pattern set design and input recognition algorithms of such techniques do not leverage users' behavioral information, which limits their robustness to imprecise inputs. In this paper, we improve these two key components by modeling users' interaction behavior. We also tested a novel Bayesian recognizer, which achieved higher selection accuracy than the Correlation recognizer when the input sequence is short. The informal evaluation results show that the selection technique can be effectively scaled to different modalities and sensing techniques.
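To make the recognition step concrete, here is a minimal sketch of correlation-based matching: the user's input is binned into time slots and compared with each target's pattern using plain Pearson correlation, and the best-scoring target is selected. The patterns, slot encoding, and function names are assumptions for illustration; this is not the paper's Correlation or Bayesian recognizer, and it does not include the user behavior model.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def select_target(input_signal, patterns):
    """Return the name of the pattern most correlated with the input, and its score."""
    scores = {name: pearson(input_signal, p) for name, p in patterns.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical patterns: 1 means the target is "on" in that time slot.
patterns = {
    "lamp":    [1, 0, 0, 1, 0, 1, 0, 0],
    "speaker": [1, 0, 1, 0, 0, 0, 1, 0],
    "tv":      [0, 1, 0, 0, 1, 0, 0, 1],
}
# The user tapped in sync with "lamp" but missed the last tap.
taps = [1, 0, 0, 1, 0, 0, 0, 0]
target, score = select_target(taps, patterns)
```

Even with one missed tap, the input still correlates most strongly with the intended pattern, which hints at why tolerance to imprecise input depends on both the pattern set and the recognizer.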

Tap-to-Pair: Associating Wireless Devices using Synchronous Tapping

IMWUT 2018 [DOI]

Currently, most wireless devices are associated by selecting the advertiser’s name from a list, a process that is inefficient and prone to picking the wrong device. We propose a spontaneous device association mechanism that initiates pairing from advertising devices without hardware or firmware modifications. Users can then associate two devices by synchronizing taps on the advertising device with the blinking pattern displayed by the scanning device. We believe that Tap-to-Pair can unlock more possibilities for impromptu interactions in smart spaces.

Prevent Unintentional Touches on Smartphone

2017

When users interact with smartphones, the holding hand may cause unintentional touches on the screen that disrupt the interaction and annoy users. We developed a capacitive image processing algorithm that identifies the patterns of unintentional touches, such as how a touch "grows" over time and how the touches on the screen relate to one another. In mechanical and manual tests, our algorithm rejected 96.33% of unintentional touches while falsely rejecting only 1.32% of intentional touches.

Theme | Medicine and Health Care

Auxiliary Diagnosis System for Epilepsy

In Tsinghua Medical School, 2018

An intelligent cloud-based sEEG processing system designed to provide a more effective means for epilepsy surgery planning and for research on the pathogenesis of epilepsy. Proper visualization of the signal data could help neurosurgeons better localize the lesion, and algorithms have the potential to uncover seizure patterns in the raw data that may be difficult for humans to find.

AirEx: Portable Air Purification Machine

2016 GIX Innovation Competition First Prize 🏆

A self-contained breathing apparatus that offers an effective way to breathe clean air without wearing a mask. As Design Lead, worked with teammates from the University of Washington and Tsinghua University to create a mobile air filtration system for polluted environments that provides adjustable assisted inhalation.

Infusion+: Intelligent Infusion System

Undergraduate thesis, 2015

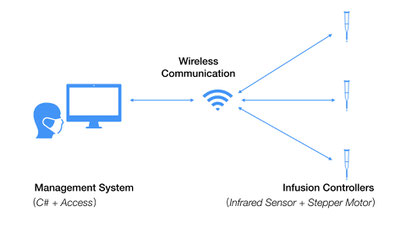

Intravenous infusion is an important part of nursing work and one of the most commonly used clinical treatments. For a long time, most hospitals and medical institutions have relied on manual operation. Our solution includes infusion controllers and a management system. Using an infrared sensor and a stepper motor, each infusion controller can detect and control the infusion speed. The management system exchanges infusion information (patient, drug, infusion speed, time, etc.) with the controllers over a wireless link.
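The detect-and-control loop can be sketched as follows, assuming the infrared sensor yields a timestamp for each detected drop and the stepper motor adjusts a clamp on the tube. The function names, the proportional gain, the step limit, and the sign convention are all hypothetical choices for this example, not details of the Infusion+ controller.

```python
def drip_rate_per_min(drop_times_s):
    """Estimate drops per minute from timestamps (in seconds) of detected drops."""
    if len(drop_times_s) < 2:
        return 0.0
    elapsed = drop_times_s[-1] - drop_times_s[0]
    if elapsed <= 0:
        return 0.0
    # n timestamps span n - 1 intervals between drops.
    return 60.0 * (len(drop_times_s) - 1) / elapsed


def motor_steps(target_rate, measured_rate, gain=0.5, max_steps=20):
    """Proportional correction (assumed convention: positive opens the clamp)."""
    steps = round(gain * (target_rate - measured_rate))
    # Limit each correction so a noisy reading cannot cause a large jump.
    return max(-max_steps, min(max_steps, steps))


# Hypothetical reading: one drop per second, while the target is 40 drops/min.
rate = drip_rate_per_min([0.0, 1.0, 2.0, 3.0])
correction = motor_steps(target_rate=40, measured_rate=rate)
```

A real controller would need calibration between motor steps and flow, plus safety handling for a blocked or empty line, which this sketch leaves out.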